I recently popped into the IRC channel of a well-known online music service to flag up a few errors that had been occurring with their API web service. I’m a big user of the service and appreciate it immensely, but I simply couldn’t get a reliable connection to it from various apps.

I’m a big believer in improving things gradually, and I must say that over the last 10 years I’ve learned a lot about running an efficient web service. While speaking with the music service team, I suddenly became the user rather than the developer (it was kind of nice to see things from the other side!), so I thought a general blog post about what I’ve learned might be useful to this site, and indeed to others.

Having run sites like TheAudioDB, TheLogoDB, TheSportsDB and Movie-XML in the past, I have a fair bit of experience running large services for millions of users. We handle a good 15 million requests on peak days such as Sunday across the various metadata sites, so I’ve had to improve our services to keep up with demand. The failure of Movie-XML taught me how important it is to scale a website quickly as demand grows: the site became a huge success overnight and then failed because it couldn’t serve API requests quickly enough. These days TheMovieDB does that job far better than I did.

We now use only about 10% of our server resources at peak times, so this setup should hopefully scale to hundreds of millions of API hits a day before we need to revisit it. With TheSportsDB recently gaining popularity thanks to its live scores features, this scalability will matter even more in the future.
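As a rough back-of-envelope check on those figures, assuming load grows linearly with request volume and treating the 15 million figure as a whole-day total:

$$
\frac{15\,000\,000\ \text{requests}}{86\,400\ \text{s/day}} \approx 174\ \text{requests/s (daily average)}
$$

$$
\frac{15\,000\,000\ \text{requests/day}}{0.10} = 150\,000\,000\ \text{requests/day at full utilization}
$$

This assumes the daily traffic shape stays the same and that nothing (locks, cache misses, network) saturates before the CPU does, so treat it as an estimate rather than a measured limit.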

So here are my top tips for creating a fast web server API:

Hardware

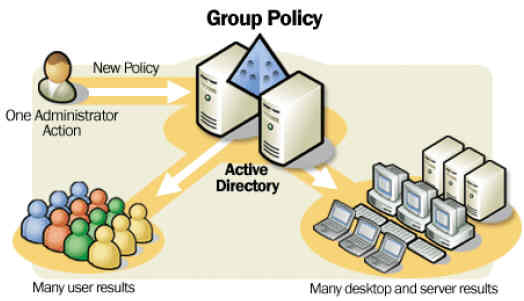

Separate the API from the front-end website at the physical level. This can mean using a dedicated server or a cloud service, but it’s important to decouple the demand on your website from the demand on your web service. Your website may be doing much more complicated operations that cause database waits, and those waits can have a knock-on effect on the API.

Invest in good web server hardware. I run my own co-located equipment and, as a server admin by day, try to use the latest hardware technology. Our web server uses Intel PCIe SSD-based storage, which is blazingly fast, and a 6-core Xeon CPU. The IO bottlenecks of traditional mechanical storage can have a huge knock-on effect on CPU and other server resources.

Match your development hardware to your server hardware. While you can’t always get an exact match, make sure it uses similar technology and software versions. For example, I found that running a different version of PHP in development caused performance problems once the code was transferred onto the main web server.

Software

The best piece of advice I can give to anyone running a popular API is to look at your users’ use cases. Typically you will have a few very large API users who are doing the same thing over and over on your service to fulfill a simple need. Analyze this data meticulously and improve the web service accordingly.
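As a minimal sketch of that kind of analysis, the snippet below counts requests per (API key, method) pair in an access log and lists the heaviest combinations. The log format, key names and method names are hypothetical sample data, not the format of any real service.

```python
from collections import Counter

# Hypothetical log format: "<timestamp> <api_key> <method> <query>"
SAMPLE_LOG = """\
2024-01-07T10:00:01 key_musicapp search_artist s=coldplay
2024-01-07T10:00:02 key_musicapp search_artist s=coldplay
2024-01-07T10:00:03 key_scoresbot last_events id=42
2024-01-07T10:00:04 key_musicapp search_artist s=coldplay
2024-01-07T10:00:05 key_scoresbot last_events id=42
2024-01-07T10:00:06 key_hobbyist artist_details id=7
"""


def top_use_cases(log_text: str, n: int = 3) -> list:
    """Count requests per (api_key, method) pair and return the n most common."""
    counts = Counter()
    for line in log_text.splitlines():
        parts = line.split()
        if len(parts) < 3:
            continue  # skip blank or malformed lines
        _, api_key, method = parts[:3]
        counts[(api_key, method)] += 1
    return counts.most_common(n)


for (api_key, method), hits in top_use_cases(SAMPLE_LOG):
    print(f"{api_key:15} {method:15} {hits}")
```

A report like this makes the "few very large users" visible quickly, and the repeated query strings hint at which calls are worth optimizing or caching first.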

Check the search functionality of your API. Measure how long each search takes and how accurate it needs to be. Databases offer all kinds of search algorithms, but does the web service need that level of accuracy? Does it need 50 results instead of 5? How does it handle duplicates? There are lots of ways to simplify the data returned until the search is far more efficient.
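A minimal sketch of a capped, de-duplicated search, using Python’s built-in sqlite3 as a stand-in for MySQL. The `artist` table and the `search_artists` function are hypothetical. In MySQL you would write the concatenation with `CONCAT()` (there `||` means logical OR by default), and a prefix pattern such as `'term%'` can use a B-tree index on the name column where a leading wildcard cannot.

```python
import sqlite3


def search_artists(conn: sqlite3.Connection, term: str, limit: int = 5) -> list:
    """Prefix search that returns at most `limit` rows and collapses duplicate names."""
    # Note: `term` is not escaped here, so a user-supplied '%' or '_' acts as a wildcard.
    rows = conn.execute(
        "SELECT MIN(id), name FROM artist "
        "WHERE name LIKE ? || '%' "   # simple prefix match instead of a fuzzy search
        "GROUP BY name "              # one row per distinct name
        "ORDER BY name LIMIT ?",      # cap the size of the result set
        (term, limit),
    ).fetchall()
    return [{"id": artist_id, "name": name} for artist_id, name in rows]


# Usage with made-up sample data
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE artist (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute("CREATE INDEX idx_artist_name ON artist (name)")
conn.executemany(
    "INSERT INTO artist (id, name) VALUES (?, ?)",
    [(1, "Coldplay"), (2, "Coldplay"), (3, "Cold War Kids"), (4, "Coldcut"), (5, "Metallica")],
)
print(search_artists(conn, "Cold"))
```

The duplicate "Coldplay" row comes back once, and the `limit` parameter keeps a broad search term from returning thousands of rows.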

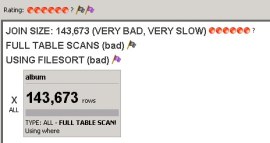

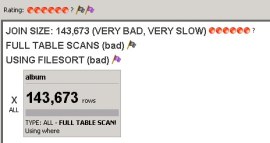

Database design is hugely important to the speed and accuracy of a web service API. Look at the number of tables, improve indexes and the links between tables, and look at how much data needs to be scanned. Full table scans are bad, too many joins are bad, and sorts that MySQL has to resolve with a filesort are bad. If you really want to take it to the extreme, you can create a table specifically for the API output and simply do a single lookup of cached results. I’ve found this to be a useful way to speed up database reads.
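Here is a minimal sketch of the "table specifically for the API output" idea, again with sqlite3 standing in for MySQL. The `artist`, `album` and `api_artist_cache` tables and both functions are hypothetical. The expensive join runs once when the cache is rebuilt (for example on a schedule or after edits), and each API request becomes a single primary-key lookup that returns ready-made JSON.

```python
import json
import sqlite3
from typing import Optional


def build_api_cache(conn: sqlite3.Connection) -> None:
    """Rebuild a table holding one ready-to-serve JSON document per artist."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS api_artist_cache ("
        "artist_id INTEGER PRIMARY KEY, payload TEXT NOT NULL)"
    )
    # The join runs here, once, instead of on every API request.
    rows = conn.execute(
        "SELECT a.id, a.name, al.title FROM artist a "
        "LEFT JOIN album al ON al.artist_id = a.id "
        "ORDER BY a.id, al.title"
    ).fetchall()
    docs = {}
    for artist_id, name, title in rows:
        doc = docs.setdefault(artist_id, {"id": artist_id, "name": name, "albums": []})
        if title is not None:  # LEFT JOIN yields NULL for artists without albums
            doc["albums"].append(title)
    conn.executemany(
        "INSERT OR REPLACE INTO api_artist_cache (artist_id, payload) VALUES (?, ?)",
        [(artist_id, json.dumps(doc)) for artist_id, doc in docs.items()],
    )
    conn.commit()


def get_artist_json(conn: sqlite3.Connection, artist_id: int) -> Optional[str]:
    """Serve a request with a single primary-key lookup; the payload is already JSON."""
    row = conn.execute(
        "SELECT payload FROM api_artist_cache WHERE artist_id = ?", (artist_id,)
    ).fetchone()
    return row[0] if row else None


# Usage with made-up sample data
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE artist (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE album (id INTEGER PRIMARY KEY, artist_id INTEGER NOT NULL, title TEXT NOT NULL);
CREATE INDEX idx_album_artist ON album (artist_id);
INSERT INTO artist VALUES (1, 'Coldplay'), (2, 'Coldcut');
INSERT INTO album (artist_id, title) VALUES (1, 'X&Y'), (1, 'Parachutes');
""")
build_api_cache(conn)
print(get_artist_json(conn, 1))
```

The trade-off is freshness: the cached documents are only as current as the last rebuild, which is usually acceptable for metadata that changes rarely.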

Switching the web service to JSON helped a lot, as it’s quicker and smaller than XML while still being universally known to developers. We deprecated our XML API about 5 years ago, and at a guess it improved bandwidth and resource usage by a good few percent.
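To see where the size difference comes from, this rough illustration (not a benchmark) serializes the same made-up record both ways with the standard library. The field names are illustrative only. Most of the saving comes from XML repeating every field name in a closing tag; parsing speed is not measured here.

```python
import json
import xml.etree.ElementTree as ET

# Made-up sample record; field names are illustrative only.
record = {
    "idArtist": "12345",
    "strArtist": "Coldplay",
    "strGenre": "Alternative Rock",
    "intFormedYear": "1996",
}


def to_json(rec: dict) -> str:
    # Compact separators drop the optional whitespace json.dumps adds by default.
    return json.dumps({"artists": [rec]}, separators=(",", ":"))


def to_xml(rec: dict) -> str:
    root = ET.Element("artists")
    artist = ET.SubElement(root, "artist")
    for key, value in rec.items():
        # Each field name appears twice in XML: in the opening and the closing tag.
        ET.SubElement(artist, key).text = value
    return ET.tostring(root, encoding="unicode")


json_body, xml_body = to_json(record), to_xml(record)
print(f"JSON: {len(json_body)} characters\nXML:  {len(xml_body)} characters")
```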

When it comes to MySQL, there are loads of options for speeding up the database. I spent countless hours tweaking these to get the right level of query caching, memory usage, table types and concurrent connections. The MySQLTuner script is useful for this, but don’t just trust it: experiment! It’s only by trial and error that you can get this right, since it depends on how your database is structured and used.

Managing usage

Let’s face it, analyzing millions of log entries in a text file is no fun! Use a live SQL analyzer to monitor your API and its peak times. That way you can see the slow queries in real time and adapt your code to make it more efficient. In my case, removing a few advanced features (such as alternative artist name searches) made a huge improvement. You can always allow that kind of search via a special URL, just not by default. I personally use Jet Profiler for MySQL, which is a great visual way of seeing which sites, tables and queries cost the most resources. Jet Profiler gives you a list of the top 10 slow queries, charts showing usage over time, and even advice on how to improve the SQL. It’s a great tool and well worth the money, but there are many free and open source alternatives.
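For a free starting point, MySQL’s built-in slow query log (the `slow_query_log` and `long_query_time` settings) records statements that exceed a time threshold. The sketch below shows the same "top slow queries" idea at the application level. `QueryTimer` is a hypothetical helper, sqlite3 stands in for MySQL, and grouping by the parameterized SQL text means every call to the same statement is aggregated regardless of its arguments.

```python
import sqlite3
import time
from collections import defaultdict


class QueryTimer:
    """Wraps a DB-API connection and records wall-clock time per SQL statement."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        self.stats = defaultdict(lambda: [0, 0.0])  # sql -> [calls, total_seconds]

    def execute(self, sql: str, params: tuple = ()) -> list:
        start = time.perf_counter()
        rows = self.conn.execute(sql, params).fetchall()
        elapsed = time.perf_counter() - start
        entry = self.stats[sql]
        entry[0] += 1
        entry[1] += elapsed
        return rows

    def top(self, n: int = 10) -> list:
        """Return (sql, calls, total_seconds) for the n statements with the most total time."""
        return sorted(
            ((sql, calls, total) for sql, (calls, total) in self.stats.items()),
            key=lambda item: item[2],
            reverse=True,
        )[:n]


# Usage with made-up sample data
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE artist (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO artist (name) VALUES (?)", [(f"Artist {i}",) for i in range(1000)])

timer = QueryTimer(conn)
for i in range(50):
    timer.execute("SELECT name FROM artist WHERE id = ?", (i,))  # indexed lookup
for _ in range(5):
    timer.execute("SELECT name FROM artist WHERE name LIKE ?", ("%99%",))  # full scan

for sql, calls, total in timer.top(10):
    print(f"{total * 1000:8.2f} ms  {calls:4d} calls  {sql}")
```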

I know all too well as an amateur developer that it’s easy to write something, but much harder to get into the minds of other developers. APIs tend to be static systems that the 3rd party developer has to adapt to. This is a good thing, but it’s worth going back to those developers and asking what they would like to see. Over the years I have added many new API methods to my sites after feedback from the user forums. Some of these new methods work far better for 3rd party developers than previous attempts, and in some cases they have improved performance as well as features.

API keys

Analyze usage constantly. Who’s using your API? What times are they using it? Why are they using it? Are any users causing problems or significant load? Revoke API keys if necessary, or use revocation as a tool to force changes. Each piece of software using your API should have its own key, and larger users should change their keys every couple of years to prevent piggybacking by unauthorized apps. It’s also important to issue separate keys for different software processes, even when they belong to the same overall user. This helps you track down problems when they show up and pinpoint them quickly.
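A minimal in-memory sketch of per-application keys with usage counting, revocation and rotation. `ApiKeyRegistry` and its methods are hypothetical; a real service would keep this in the database and count usage from its request logs, but the bookkeeping is the same: one key per (owner, app) pair, so load and problems can be traced to a specific piece of software.

```python
import secrets
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class ApiKeyRegistry:
    keys: dict = field(default_factory=dict)      # key -> {"owner": ..., "app": ...}
    revoked: set = field(default_factory=set)
    usage: Counter = field(default_factory=Counter)

    def issue(self, owner: str, app: str) -> str:
        """Create a new random key for one application of one owner."""
        key = secrets.token_hex(16)
        self.keys[key] = {"owner": owner, "app": app}
        return key

    def check(self, key: str) -> bool:
        """Accept a request if the key exists and is not revoked, counting the hit."""
        if key not in self.keys or key in self.revoked:
            return False
        self.usage[key] += 1
        return True

    def revoke(self, key: str) -> None:
        self.revoked.add(key)

    def rotate(self, old_key: str) -> str:
        """Issue a replacement key for the same owner/app and revoke the old one."""
        info = self.keys[old_key]
        new_key = self.issue(info["owner"], info["app"])
        self.revoke(old_key)
        return new_key

    def usage_by_app(self) -> Counter:
        """Total hits per (owner, app), summed across old and rotated keys."""
        totals = Counter()
        for key, hits in self.usage.items():
            info = self.keys[key]
            totals[(info["owner"], info["app"])] += hits
        return totals


# Usage: one owner, two separate processes, each with its own key
registry = ApiKeyRegistry()
desktop_key = registry.issue("acme", "desktop-player")
importer_key = registry.issue("acme", "library-importer")
```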

If you’re writing data back to your API, make sure it’s efficient. Writing rows and updating indexes can take a lot of CPU in some cases. Use API tokens (essentially temporary user API keys) to track writes like this, and limit write access to only those users who really need it.
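A minimal sketch of such temporary write tokens, assuming an allowlist of users who may write at all and a fixed time-to-live. `WriteTokenStore`, the one-hour default and the injectable clock are all illustrative choices, not a description of how any particular site does it.

```python
import secrets
import time
from typing import Callable, Optional


class WriteTokenStore:
    """Issues short-lived tokens that authorize writes for approved users only."""

    def __init__(self, writers: set, ttl_seconds: float = 3600,
                 clock: Callable[[], float] = time.time):
        self.writers = writers   # users allowed to write at all
        self.ttl = ttl_seconds
        self.clock = clock       # injectable so expiry can be tested
        self.tokens = {}         # token -> (user_id, expires_at)

    def issue(self, user_id: str) -> str:
        if user_id not in self.writers:
            raise PermissionError(f"{user_id} has no write access")
        token = secrets.token_urlsafe(24)
        self.tokens[token] = (user_id, self.clock() + self.ttl)
        return token

    def user_for(self, token: str) -> Optional[str]:
        """Return the user behind a valid token, or None if unknown or expired."""
        entry = self.tokens.get(token)
        if entry is None:
            return None
        user_id, expires_at = entry
        if self.clock() >= expires_at:
            del self.tokens[token]  # clean up expired tokens lazily
            return None
        return user_id
```

Because every write arrives with a token that maps back to one user, write load can be attributed and throttled per user, and an expired or leaked token stops working on its own.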

Upgrade the API – Over time it may become apparent that the original API doesn’t cut it and needs to be replaced. It’s a great idea to version your API: v1 for the initial release, then v2 with some extended features, v3 for performance improvements, etc. Over time developers will migrate to the new API, and you can run the versions in parallel for a transitional period.
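A minimal sketch of running two API versions side by side by putting the version in the URL path. The `/api/<version>/<method>` layout, the handlers and their response shapes are hypothetical; the point is that v1 keeps answering unchanged while v2 is introduced next to it.

```python
def artist_v1(params: dict) -> dict:
    # v1: original response shape, kept unchanged for existing clients
    return {"artists": [{"idArtist": params["id"], "strArtist": "Example"}]}


def artist_v2(params: dict) -> dict:
    # v2: a slimmer response shape for new clients
    return {"artist": {"id": params["id"], "name": "Example"}}


# (version, method) -> handler; both versions are served in parallel
ROUTES = {
    ("v1", "artist"): artist_v1,
    ("v2", "artist"): artist_v2,
}


def dispatch(path: str, params: dict) -> tuple:
    """Route a path such as '/api/v1/artist' to the matching versioned handler."""
    parts = path.strip("/").split("/")
    if len(parts) != 3 or parts[0] != "api":
        return 404, {"error": "not found"}
    handler = ROUTES.get((parts[1], parts[2]))
    if handler is None:
        return 404, {"error": "unknown version or method"}
    return 200, handler(params)


print(dispatch("/api/v1/artist", {"id": "1"}))
print(dispatch("/api/v2/artist", {"id": "1"}))
```

Retiring v1 later is then a matter of removing its entries from the routing table once its traffic (visible per key, as above) has dropped off.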

Documentation

Although probably unpopular with most developers, writing good documentation is key to a reliable API service. Many developers make mistakes or take shortcuts when initially writing their software, and good documentation helps reduce those mistakes and lets them get the data they need with the minimum number of API calls. I’ve even gone as far as looking at 3rd party developers’ code on places like GitHub to suggest better ways of using our APIs.

Documentation isn’t just about showing your API syntax; try showing some real-world guides or examples of other applications using it. Make a sandbox so developers can quickly test the API with dummy data. I came across a sports data API recently that limited lookups to once an hour for some methods. Maddening for a developer just starting out!

Artwork

We serve a lot of artwork through our API, and it became apparent that a huge amount of bandwidth was being consumed this way. We recently moved all our artwork to a subdomain and put it behind Cloudflare so it could be cached on their content delivery network. This saves us a couple of terabytes a month in data fees, and best of all, it’s currently free!
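One common pattern that pairs well with a CDN is to name artwork files after a hash of their contents (a form of cache busting) and send a long `Cache-Control` lifetime, so edge caches can keep files for a long time and an updated image simply gets a new URL. This is a sketch under assumptions: `art.example.com`, the 30-day lifetime and the helper functions are hypothetical, and how a CDN such as Cloudflare treats these headers depends on its configuration.

```python
import hashlib
from pathlib import PurePosixPath
from urllib.parse import quote

ARTWORK_HOST = "https://art.example.com"  # hypothetical CDN-fronted subdomain
ARTWORK_MAX_AGE = 30 * 24 * 3600          # 30 days, an assumed value


def artwork_filename(image_bytes: bytes, original_name: str) -> str:
    """Name the file after a hash of its contents, keeping the original extension."""
    digest = hashlib.sha256(image_bytes).hexdigest()[:16]
    return digest + PurePosixPath(original_name).suffix.lower()


def artwork_url(kind: str, filename: str) -> str:
    """Absolute URL on the artwork subdomain, e.g. for an API response field."""
    return f"{ARTWORK_HOST}/images/{quote(kind)}/{quote(filename)}"


def artwork_headers() -> dict:
    """Headers the artwork origin could send so shared caches may keep the file."""
    return {"Cache-Control": f"public, max-age={ARTWORK_MAX_AGE}"}


name = artwork_filename(b"...image bytes...", "Coldplay-Thumb.PNG")
print(artwork_url("thumb", name))
print(artwork_headers())
```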

Hopefully the above helps if you are building a new API or improving a current one.